Chris Baraniuk, Technology reporter

Washington Post via Getty Images

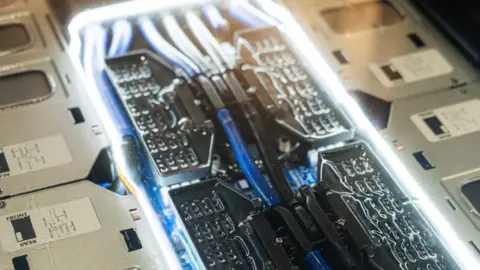

They work hard, running 24 hours a day, 7 days a week at high speeds and getting hot, but data center computer chips also get a lot of pampering. Some of them essentially live in a spa.

“We'll have a liquid that rises up and [then] rains or drips onto the component,” says Jonathan Ballon, chief executive of liquid cooling company Iceotope. “Some things will get sprayed.”

In other cases, the industrious gizmos recline in circulating baths of liquid that carry away the heat they generate, allowing them to run at very high speeds, a practice called “overclocking.”

“We have customers who overclock their computers all the time because the risk of the server burning out is zero,” says Mr Ballon. He adds that one client, a US hotel chain, plans to use the heat from a hotel's servers to warm its rooms, laundry and pool.

Without cooling, data centers collapse.

In November, a cooling system failure at a US data center knocked out financial trading technology at CME Group, the world's largest exchange operator. Since then the company has implemented additional cooling capacity to help protect against a recurrence of this incident.

Demand for data centers is currently growing rapidly, thanks in part to the development of artificial intelligence technologies. But the huge amounts of energy and water that many of these sites consume mean they are becoming increasingly controversial.

More than 200 environmental groups in the US recently demanded a moratorium on the construction of new data centers in the country. But some data center companies say they want to reduce their impact.

They also have another incentive: data center computer chips are becoming so powerful that many in the industry say traditional cooling methods, such as air cooling, in which fans constantly blow air over the hottest components, are no longer sufficient for some operations.

Mr Ballon is aware of the growing controversy around the construction of energy-intensive data centers. “Communities are resisting these projects,” he says. “We need significantly less electricity and water. We don’t have fans at all – we work silently.”


Iceotope says its approach to liquid cooling, which can cool multiple components in a data center, not just processor chips, can reduce cooling-related power consumption by up to 80%.

The company's technology uses water to cool an oil-based fluid that is in direct contact with the computer hardware. The water stays in a closed loop, so there is no need to keep drawing it from local sources.

I ask whether the oil-based fluids in the firm's cooling system are derived from fossil fuels. He says some are, although he stresses that none contain PFAS, also known as “forever chemicals”, which are harmful to human health.

Some data center liquid cooling technologies use refrigerants containing PFAS. Many refrigerants are also very potent greenhouse gases that threaten to worsen climate change.

Two-phase cooling systems use such refrigerants, says Yulin Wang, a former senior technology analyst at IDTechEx, a market research firm. The refrigerant starts out as a liquid, but heat from the server components causes it to evaporate into a gas. This phase change absorbs a lot of energy, making it an efficient way to cool.
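To see why boiling a coolant moves so much more heat than simply warming it, compare the two with textbook arithmetic. This is a minimal sketch that assumes water's well-known values (specific heat of about 4.18 kJ per kg per kelvin, latent heat of vaporization of about 2,257 kJ per kg at 100 °C) purely for illustration; the dielectric refrigerants used in data centers have different properties, and the function names are hypothetical.

```python
# Illustrative constants for WATER (an assumption for this sketch);
# real data center refrigerants have different, typically smaller, values.
SPECIFIC_HEAT_KJ_PER_KG_K = 4.18  # energy to warm 1 kg of liquid by 1 K
LATENT_HEAT_KJ_PER_KG = 2257.0    # energy to boil 1 kg at constant temperature


def sensible_heat_kj(mass_kg: float, delta_t_k: float) -> float:
    """Heat absorbed by warming a liquid without a phase change: Q = m * c_p * dT."""
    return mass_kg * SPECIFIC_HEAT_KJ_PER_KG_K * delta_t_k


def latent_heat_kj(mass_kg: float) -> float:
    """Heat absorbed by evaporating a liquid at constant temperature: Q = m * h_fg."""
    return mass_kg * LATENT_HEAT_KJ_PER_KG


if __name__ == "__main__":
    warm = sensible_heat_kj(1.0, 10.0)  # warm 1 kg by 10 K
    boil = latent_heat_kj(1.0)          # boil 1 kg
    print(f"Warming 1 kg by 10 K absorbs {warm:.1f} kJ")
    print(f"Boiling 1 kg absorbs {boil:.1f} kJ, about {boil / warm:.0f}x more")
```

With these assumed values, boiling a kilogram of liquid soaks up roughly 54 times the heat of warming it by 10 K, which is the intuition behind two-phase designs.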

In some designs, data center equipment is completely immersed in large amounts of PFAS-containing refrigerant. “Vapors can escape from the tank,” Mr Wang adds. “There may be some safety issues.” In other designs, the coolant is supplied directly to only the hottest components: the computer chips.

Some companies offering two-phase cooling are now switching to PFAS-free refrigerants.


Over the years, companies have experimented with vastly different approaches to cooling, trying to find better ways to keep gadgets running in data centers.

Microsoft, for example, famously sank a tube-shaped container full of servers into the sea off the Orkney Islands. The idea was that the cold Scottish seawater would increase the efficiency of the air cooling systems inside the vessel.

Last year, Microsoft confirmed that it had killed the project. But the company learned a lot from it, says Alistair Spears, general manager of global infrastructure in Microsoft's Azure business group. “Without [human] operators, there are fewer problems happening – this affects some of our operating procedures,” he says. Data centers that are more hands-off appear to be more reliable.

Initial results showed that the undersea data center had a power usage effectiveness (PUE) rating of 1.07, suggesting it was far more efficient than the vast majority of land-based data centers. It also required no water.
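PUE (power usage effectiveness) is the total energy a facility consumes divided by the energy delivered to its IT equipment, so a perfect score is 1.0 and anything above that is overhead such as cooling and power conversion. The minimal sketch below works through that ratio; the energy figures are hypothetical and chosen only so the result matches the 1.07 rating, and the function names are illustrative.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def overhead_fraction(pue_value: float) -> float:
    """Non-IT energy (cooling, power conversion, lighting) as a fraction of the IT load."""
    return pue_value - 1.0


if __name__ == "__main__":
    # Hypothetical figures: servers use 1,000 kWh, the whole site uses 1,070 kWh.
    p = pue(1070.0, 1000.0)
    print(f"PUE = {p:.2f}, overhead = {overhead_fraction(p):.0%} of the IT load")
```

Read this way, a PUE of 1.07 means only about 7% extra energy went on everything other than the servers themselves.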

But Microsoft eventually concluded that the economics of building and maintaining undersea data centers weren't very favorable.

The company is still working on liquid cooling ideas, including microfluidics, in which liquid flows through tiny channels running through the layers of a silicon chip. “You can imagine a labyrinth of liquid cooling through silicon at the nanometer scale,” says Mr Spears.

Researchers are putting forward other ideas.

In July, Renkun Chen of the University of California, San Diego, and colleagues published a paper detailing their idea for a cooling technology based on filled-pore membranes that could help cool chips passively, without the need to actively pump liquid or blow air.

“Essentially, you use heat to provide pumping power,” says Professor Chen. He compares this to the way water evaporating from a tree's leaves creates a pumping effect that draws more water up through the trunk and along the branches to replenish the leaves. Professor Chen says he hopes to commercialize the technology.

New ways to cool data center technology are increasingly in demand, says Sasha Luccioni, head of artificial intelligence and climate at Hugging Face, a machine learning company.

This is partly due to demand for AI, including generative AI such as large language models (LLMs), the systems that chatbots run on. In previous studies, Dr Luccioni has shown that such technologies consume a lot of energy.

“If you have very power-hungry models, then the cooling needs to be increased,” she says.

Reasoning models, which work through problems in multiple steps, are even more demanding, she adds.

They use “hundreds or thousands of times more energy” than standard chatbots that simply answer questions. Dr Luccioni is calling for more transparency from artificial intelligence companies about how much energy their various products consume.

For Mr Ballon, LLMs are just one form of AI, and he argues that they have already “reached their limit” in terms of productivity.